Lewis Poche

This article is the final installment in our series explaining the HPL Adaptive Software Review Standards. We have identified 26 criteria, organized into six domains, for evaluating software. This review framework addresses the question, "How do students learn online?" This resource aims to give educators a deeper understanding of how students learn when they use online adaptive programs. Read the other two articles in the series:

- Data-Informed Teaching: Reading the Stories Behind the Numbers

- F.U.N. - Adaptive Software’s Role in Facilitating User Navigation

If you are already a member of the HPL Network, you can access the template for our software review standards by clicking here. If you are not yet a member, you can join for free by completing this form.

We have also used these standards to assess 25 commonly used adaptive software programs. To learn more about accessing these reviews or to seek guidance from our team about adaptive software, please visit the HPL Adaptive Software Library page.

Mistakes Serve as Learning’s Stepping Stones

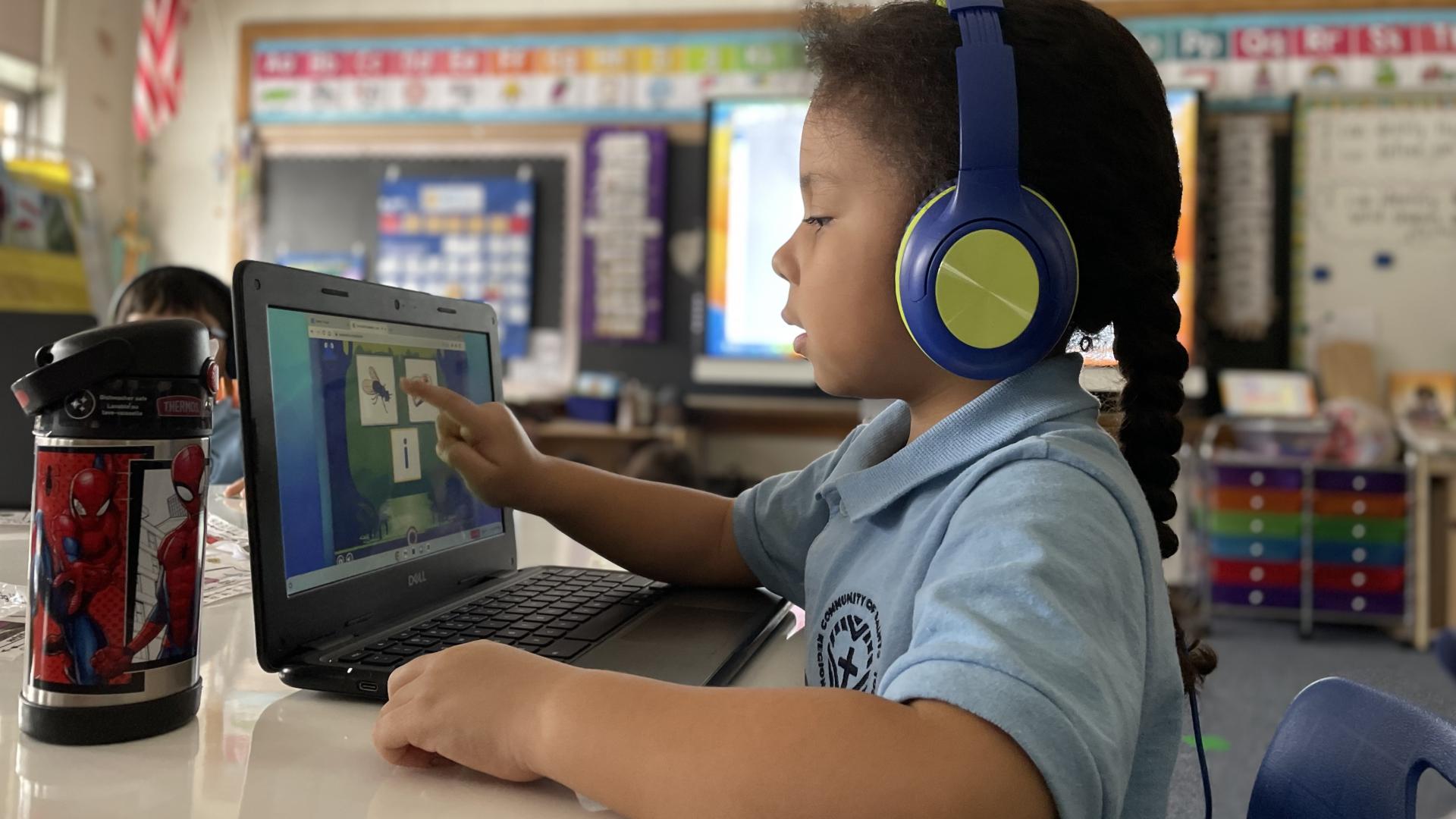

As a teacher, I took every chance to turn a student's incorrect answer into an opportunity for deeper discussion and learning. It's a fact: We learn by making mistakes and then receiving support to reach the correct answer. This article explores how adaptive software programs can support students after they submit an incorrect answer.

Adaptive software programs are one tool teachers use to personalize the learning experience for students. I define adaptive software platforms by what they are not; they are not “one-size-fits-all” programs. In various ways, they adapt according to the input they receive from students. If students answer a string of questions correctly, the program might fast-track them to the assessment or give them a more challenging problem. If students appear to be struggling, the program might offer hints, differentiated explanations, visual demonstrations, instructional videos, or less complex questions.
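For readers curious about what "adapting to student input" can look like inside a program, here is a minimal Python sketch of the kind of rule described above. It is not how any particular product works: the `next_step` function and both thresholds (three correct answers in a row to advance, two misses in a row to trigger support) are hypothetical, chosen only for illustration.

```python
from typing import List

# Hypothetical thresholds, chosen only for illustration.
STREAK_TO_ADVANCE = 3   # consecutive correct answers before moving ahead
MISSES_TO_SUPPORT = 2   # consecutive incorrect answers before offering help


def next_step(recent_results: List[bool]) -> str:
    """Pick the next activity from a student's most recent answers.

    recent_results holds True for a correct answer and False for an
    incorrect one, oldest first. This is a hypothetical rule, not any
    specific program's logic.
    """

    def trailing(value: bool) -> int:
        # Count how many results at the end of the list equal `value`.
        count = 0
        for result in reversed(recent_results):
            if result != value:
                break
            count += 1
        return count

    if trailing(True) >= STREAK_TO_ADVANCE:
        return "advance"   # e.g., a harder problem or the assessment
    if trailing(False) >= MISSES_TO_SUPPORT:
        return "support"   # e.g., a hint, video, or less complex question
    return "continue"      # another question at the same level
```

A student with three correct answers in a row (`[True, True, True]`) would be advanced, while one who has just missed two questions (`[True, False, False]`) would be routed to support. Real programs typically weigh far more signals than a simple streak.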

Research Basis for Adaptivity

This adaptivity is intended to meet students at their just-right level. The most prominent research supporting this type of personalization is Vygotsky's work on the "Zone of Proximal Development." The Zone of Proximal Development suggests that learners have a sweet spot where they learn best: between what they can do independently and what they cannot yet do, even with help. In this zone, a more knowledgeable peer can help them learn and achieve more than they could alone.

Think of what that more knowledgeable peer shares as feedback. In "Effects of Feedback in a Computer-Based Learning Environment on Students' Learning Outcomes: A Meta-Analysis," the authors explored the impact of feedback in a computer-based setting. Their results show that feedback accompanied by an explanation "produced larger effect sizes than feedback regarding the correctness of the answer or providing the correct answer" (Van der Kleij, Feskens, & Eggen, 2015). In other words, feedback with an explanation is more effective than feedback that simply tells students they were incorrect or gives them the correct answer. The authors also found that "effect sizes were negatively affected by delayed feedback timing." In other words, delaying feedback reduced its benefit to student learning.

In sum, adaptivity in education aims to meet each student at their individual learning level, often called their just-right level. This kind of guidance is closely tied to feedback: feedback that includes an explanation and is delivered promptly after a student answers a question incorrectly leads to better learning outcomes.

Why Use Computers for Feedback?

One of the standout advantages of adaptive software programs is their capacity to mimic the personalized support that a teacher typically provides. While a teacher could theoretically offer this degree of individualized support, doing so for an entire class is impossible in practice. Furthermore, teachers can use their time more efficiently by conducting high-leverage small-group instruction, planning relevant lessons, and giving actionable feedback rather than constantly offering one-on-one tutoring.

What about a struggling student's classmate? Could they serve as a more knowledgeable peer? Of course! This type of collaboration and peer support is important to promote in any blended-learning classroom. However, relying on classmates might not always be the most appropriate solution. Depending on the grade band, an adaptive software program, particularly one that offers robust support, might explain a concept to a struggling student better than a classmate could (especially for very young learners). Additionally, more knowledgeable peers have their own work to complete, or there might not be any students who have achieved sufficient mastery to provide assistance.

In essence, blended-learning practitioners use adaptive software to play the role of a more knowledgeable peer for their students. Computer-based adaptive support has limitations, but it also offers the advantages described above. We have identified three crucial attributes of how robust adaptive software responds to a student's incorrect answer. These features are so pivotal that they make up the first three standards in our HPL Adaptive Software Review Standards.

Effective Adaptive Software Programs are “Really Smart Unicorns”

Standards A.1, A.2, and A.3, our first three criteria, measure a program's robustness and adaptability. For a software program to meet our definition of adaptability, the support it provides after a student answers a question incorrectly must be required, specific, and understandable. You can remember these three characteristics through a fun mnemonic: "Really Smart Unicorns." Here's an AI-generated image to further reinforce the concept.

Let’s explore these three standards in more depth.

Required

HPL Adaptive Software Review Standard 1: The program immediately guides the student through required learning activities (e.g., hints, videos, demonstrations, explanations, etc.) to address misconceptions. Students need to be given the opportunity to re-attempt the question.

To say that support is required means students can't "opt out" or blow past the formative feedback. To explain this standard, consider a typical homework or classwork assignment. In this approach, students complete tasks on their own and then await feedback from their teacher. This process has a few limitations.

- Immediacy: When students answer a question incorrectly, it's an opportunity for learning and course correction. Students are most invested in fixing their answer right after getting it wrong. Yet in a conventional approach, they do not receive feedback until much later, after that moment has passed.

- Necessity: Students must understand their mistakes and learn how to correct them. Merely offering a dismissible pop-up message or suggesting a video might not be sufficient. That lighter approach could work better for older learners who have the self-regulation skills to recognize when they need extra support (for instance, high school students). Because conventional pencil-and-paper assignments are static by nature, they cannot deliver any dynamic intervention, required or not.

- Mastery-Oriented: On conventional assignments, students generally are not given unlimited chances to retry a question. Logistically, teachers cannot feasibly support that approach to grading because of time constraints and other factors.

Enter adaptive software: a solution designed to address these limitations. When a student provides an incorrect response, adaptive software offers additional support before the student makes another attempt. This approach strives to bridge the gaps created by the traditional feedback model.
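To make the "required" idea concrete, here is a minimal Python sketch of the feedback loop Standard 1 describes: every incorrect answer triggers support immediately, and the student then re-attempts. The `attempt_question` function, its parameters, and the `show_support` callback are hypothetical stand-ins for whatever a real program does internally; this is an illustration, not any product's actual code.

```python
from typing import Callable, List


def attempt_question(
    correct_answer: str,
    attempts: List[str],
    show_support: Callable[[str], None],
) -> bool:
    """Hypothetical sketch of Standard 1: support before each re-attempt.

    attempts: the student's answers, in the order they were given.
    show_support: hypothetical callback that presents a hint, video,
        demonstration, or explanation for the incorrect answer.
    Returns True once the student answers correctly, False otherwise.
    """
    for answer in attempts:
        if answer == correct_answer:
            return True
        # Incorrect: support is shown immediately. There is no code path
        # that lets the student skip it, which is what makes it "required."
        show_support(answer)
    return False
```

For example, if a student answers "10" and then "12" to a question whose answer is "12," support is shown once (after "10") before the correct second attempt. Because the loop accepts as many attempts as the student makes, it also reflects the mastery-oriented idea of re-attempting until the concept is understood.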

Specific

HPL Adaptive Software Review Standard 2: The program provides elaborate feedback specific to the original question that the student got wrong.

Specific feedback directly addresses the concept a student struggled with, within the context of the original question. Feedback that is vague and detached from the specific learning context usually proves unhelpful.

- Generic Feedback: This type of feedback is not tailored to the exact misunderstanding a student is facing. In mathematics, when a student answers a question incorrectly, the feedback should include additional guidance targeted to the specific sub-step or skill the student had difficulty with. For instance, if a student makes an error in multi-step multiplication while solving a one-step equation, a generic video on "How to Solve a Simple One-Step Equation" would not be the best response. What the student truly needs is a concise review of multiplication.

- Decontextualized Feedback: This form of feedback ignores the particular context in which the error occurred. Context is especially important in ELA reading comprehension lessons. If a student misinterprets the main idea of a text like "The Tell-Tale Heart," the feedback they receive should be tied directly to that story rather than to an unrelated piece of literature.

The goal is to provide assistance that directly addresses the areas where the student needs improvement, within the context of the question the student answered incorrectly.

Understandable

HPL Adaptive Software Review Standard 3: The instructional content given to students is usable (at that student's level, and content is presented in a way they understand).

For feedback to be understandable, it should be presented at the student's level (within their Zone of Proximal Development) and through a meaningful instructional modality. Research suggests that "if [feedback] is too detailed or complex, the feedback can be useless to students. Therefore…feedback, to benefit learning, should not be overly complex" (Shute and Rahimi, 2017). With this in mind, and considering the specific context of adaptive software programs, we look for feedback that is at a student's level and presented in a way that makes sense to them.

- At Their Level: For a kindergartener, written feedback might not be suitable, as they are still in the "learning to read" stage and have not yet moved on to "reading to learn." The same idea applies to explaining concepts at a level that matches a student's reading ability. While not all passages should be presented at a student's reading level (as this might hinder their progress), the best practice is to offer tailored responses when students engage with the material independently. This approach helps them grasp the content without language becoming an obstacle.

- Meaningful Modality: Mathematics instruction spans a spectrum from conceptual understanding to procedural fluency. The right balance between the two has long been debated, in what is often called the "math wars." Different adaptive software developers appear to have adopted instructional approaches at different points along this spectrum: some explanations concentrate heavily on concepts, while others emphasize the procedural steps of problem-solving. While both are crucial, one program's instructional approach might resonate more with your learners than another's.

A general guiding question is whether a student receiving a particular intervention would better understand what to do differently on their next attempt. We look for explanations that are appropriate both developmentally and in terms of content. This question helps us gauge the effectiveness of explanations and interventions, ensuring they contribute meaningfully to a student's learning journey.

These three standards stand out as core criteria when evaluating adaptive software programs for blended-learning classrooms. That said, every teacher, group of students, and school context is unique, and these considerations might lead educators to prioritize certain capabilities and standards over others. Still, as a general rule, a program that meets these three criteria is likely to effectively support students in growing through their Zone of Proximal Development.

Since 2015, Higher-Powered Learning has empowered Catholic school teachers and leaders to leverage technology and related innovative research-based educational practices to meet the needs of all learners.

Subscribe to the "Higher-Powered Learning News" list to join us in this mission.

If you have any questions about Higher-Powered Learning, please contact our team at hpl@nd.edu.

Alliance for Catholic Education
